Lec 7. Application & Tips

📖 Learning rate

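- A key factor when building a model from data

- The learning rate and the gradient together are used to find the optimal model parameters (the point where the cost is at its minimum)

- One of the hyperparameters (values the user sets by hand)

- When declaring which optimizer to apply, the learning_rate is specified along with it [GradientDescentOptimizer(learning_rate= x.xx)]

- With an ill-sized learning rate (step size), it is hard to reach the minimum

→ Too large: overshooting can occur (each step jumps past the minimum)

→ Too small: training takes a long time and can stall at a local minimum

∴ A suitably sized learning rate is needed (a common starting point is learning rate = 0.01, adjusted from there)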
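To make the effect of the step size concrete, here is a minimal sketch that runs plain gradient descent on the toy cost w², whose gradient is 2w. The function name, the starting point, and the three learning rates are assumptions chosen for illustration. Because this toy cost is convex, it only shows the slow-progress side of a small learning rate, not the local-minimum case.

```python
def gradient_descent(lr, steps=20, w=5.0):
    """Minimize cost(w) = w**2 (gradient 2*w) with a fixed learning rate."""
    for _ in range(steps):
        w -= lr * 2 * w
    return w

too_large = gradient_descent(lr=1.1)     # |w| grows every step: overshooting
too_small = gradient_descent(lr=0.001)   # barely moves away from the start
reasonable = gradient_descent(lr=0.1)    # approaches the minimum at w = 0

print(too_large, too_small, reasonable)
```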

📖 Learning rate decay

- Each time the cost plateaus, adjust the learning rate so that the cost can keep decreasing

- Typically start with a large learning rate, then reduce it at regular epoch intervals

→ Can shorten the time needed to reach the optimum

Implementation methods

- Step decay

: adjust the learning rate every N epochs

i.e. adjust at each specific step (epoch interval)

- Exponential decay

: decrease the learning rate exponentially

- 1/t decay

: decrease the learning rate in proportion to 1/epoch
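The three schedules can be sketched as plain functions of the epoch number. The function names and the constants (`drop`, `every`, `k`) are assumptions picked for illustration, not values from the lecture.

```python
import math

def step_decay(lr0, epoch, drop=0.5, every=10):
    """Multiply the learning rate by `drop` once every `every` epochs."""
    return lr0 * drop ** (epoch // every)

def exponential_decay(lr0, epoch, k=0.05):
    """Shrink the learning rate continuously as exp(-k * epoch)."""
    return lr0 * math.exp(-k * epoch)

def inverse_time_decay(lr0, epoch, k=0.1):
    """1/t decay: the learning rate falls off as 1 / (1 + k * epoch)."""
    return lr0 / (1 + k * epoch)

for epoch in (0, 10, 20):
    print(epoch,
          step_decay(0.1, epoch),
          exponential_decay(0.1, epoch),
          inverse_time_decay(0.1, epoch))
```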

📖 Data preprocessing

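- Feature scaling

Brings features onto a comparable scale and reduces the influence of outliers that lie outside the densely populated region → training concentrates on the main data and performance improves

(1) Standardization

: measures how far each value lies from the mean: (x − mean) / standard deviation

(2) Normalization

: rescales the data into the 0 ~ 1 range: (x − min) / (max − min)

- Noisy data

- Preprocessing is the process of removing useless data (noisy data) so that only data useful for training remains

- Needed to build accurate models for numeric data, NLP (natural language processing), face images, etc.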
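As a small illustration of the two feature-scaling methods above, the sketch below applies both to a single column of values using only the standard library. The function names are hypothetical, and the sample values are the first four entries of the large third column in the practice data.

```python
from statistics import mean, pstdev

def standardize(values):
    """Standardization: (x - mean) / standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma for v in values]

def normalize(values):
    """Min-max normalization: rescale values into the 0 ~ 1 range."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]

volumes = [908100, 1828100, 1438100, 1008100]
standardized = standardize(volumes)
normalized = normalize(volumes)
print(standardized)
print(normalized)
```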

📖 Overfitting

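A state of over-fitting: accuracy measured on the given training data comes out high, yet accuracy actually drops when the model is applied to real data

- High bias (underfit) : the model has not learned enough

- High variance (overfit) : the model fits the given training data so closely that it does not generalize to other data (its predictions fluctuate a lot)

Solutions

- Provide more training data

- Adjust the set of features

b. Reduce the number of features : a form of dimensionality reduction (e.g. PCA)

c. Increase the number of features : used to make an underfitted model more expressive

→ The number of features must be tuned to a suitable level

- Regularization

A regularization term is added after the cost function

- Regularization strength (λ)

: a constant, usually chosen between 0 and 1. The closer it is to 0, the less weight regularization carries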
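A minimal sketch of a regularized cost, assuming a mean-squared-error base cost and an L2 penalty of sum(w²) / 2, which is the same convention `tf.nn.l2_loss` uses. The function names and the argument `lam` (standing in for λ) are illustrative.

```python
def mse(predictions, labels):
    """Mean squared error between predictions and labels."""
    return sum((p - y) ** 2 for p, y in zip(predictions, labels)) / len(labels)

def l2_regularized_cost(predictions, labels, weights, lam=0.01):
    """Base cost plus lam * (sum of squared weights / 2)."""
    penalty = sum(w ** 2 for w in weights) / 2
    return mse(predictions, labels) + lam * penalty

# With a perfect fit, only the penalty term remains.
no_reg = l2_regularized_cost([1.0, 2.0], [1.0, 2.0], [3.0, 4.0], lam=0.0)
with_reg = l2_regularized_cost([1.0, 2.0], [1.0, 2.0], [3.0, 4.0], lam=0.1)
print(no_reg, with_reg)
```

With λ = 0 the penalty vanishes, and larger λ pushes the optimizer toward smaller weights.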

Practice code

import numpy as np

import tensorflow as tf

# Training data: values in the 800s plus some outliers (the third column is far larger)

xy = np.array([[828.659973, 833.450012, 908100, 828.349976, 831.659973],

               [823.02002, 828.070007, 1828100, 821.655029, 828.070007],

               [819.929993, 824.400024, 1438100, 818.97998, 824.159973],

               [816, 820.958984, 1008100, 815.48999, 819.23999],

               [819.359985, 823, 1188100, 818.469971, 818.97998],

               [819, 823, 1198100, 816, 820.450012],

               [811.700012, 815.25, 1098100, 809.780029, 813.669983],

               [809.51001, 816.659973, 1398100, 804.539978, 809.559998]])

x_train = xy[:, 0:-1]

y_train = xy[:, [-1]]

# Normalization function: scales each column into the 0 ~ 1 range

def normalization(data):

    numerator = data - np.min(data, 0)

    denominator = np.max(data, 0) - np.min(data, 0)

    return numerator / denominator

# Preprocess the training data with the normalization function and update xy

xy = normalization(xy)

# Update the training data

x_train = xy[:, 0:-1]

y_train = xy[:, [-1]]

Define the functions that build a linear regression model from the normalized data

# Declare the dataset (a single batch holding every sample)

dataset = tf.data.Dataset.from_tensor_slices((x_train, y_train)).batch(len(x_train))

# Declare the weight and bias

W = tf.Variable(tf.random.normal((4, 1)), dtype=tf.float32)

b = tf.Variable(tf.random.normal((1,)), dtype=tf.float32)

# Define the hypothesis (y = Wx + b)

def linearReg_fn(features):

    hypothesis = tf.matmul(features, W) + b

    return hypothesis

# L2 loss function: counters overfitting

def l2_loss(loss, beta=0.01):  # beta is the lambda value

    W_reg = tf.nn.l2_loss(W)  # output = sum(t ** 2) / 2

    loss = tf.reduce_mean(loss + W_reg * beta)  # regularized loss

    return loss

# Define the cost function used to check the hypothesis

def loss_fn(hypothesis, features, labels, flag=False):

    cost = tf.reduce_mean(tf.square(hypothesis - labels))  # mean of (hypothesis - y)^2, to be minimized

    if flag:  # flag decides whether the L2 loss is applied

        cost = l2_loss(cost)

    return cost

Set the learning rate for training: learning rate decay

is_decay = True  # whether to apply decay

starter_learning_rate = 0.1

# Choose the learning rate

if is_decay:

    # Exponential decay: lower the learning rate every 50 optimizer steps

    # (one step per epoch here, since each epoch is a single full batch)

    learning_rate = tf.keras.optimizers.schedules.ExponentialDecay(initial_learning_rate=starter_learning_rate,

                                                                   decay_steps=50,

                                                                   decay_rate=0.96,

                                                                   staircase=True)

    optimizer = tf.keras.optimizers.SGD(learning_rate)

else:

    optimizer = tf.keras.optimizers.SGD(learning_rate=starter_learning_rate)

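# Compute the loss (mean squared error, plus the L2 term when l2_flag is set) and its gradients

# Note: the hypothesis argument is unused; the prediction is recomputed inside the tape so gradients can be tracked

def grad(hypothesis, features, labels, l2_flag):

    with tf.GradientTape() as tape:

        loss_value = loss_fn(linearReg_fn(features), features, labels, l2_flag)

    return tape.gradient(loss_value, [W, b]), loss_value  # gradients, loss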

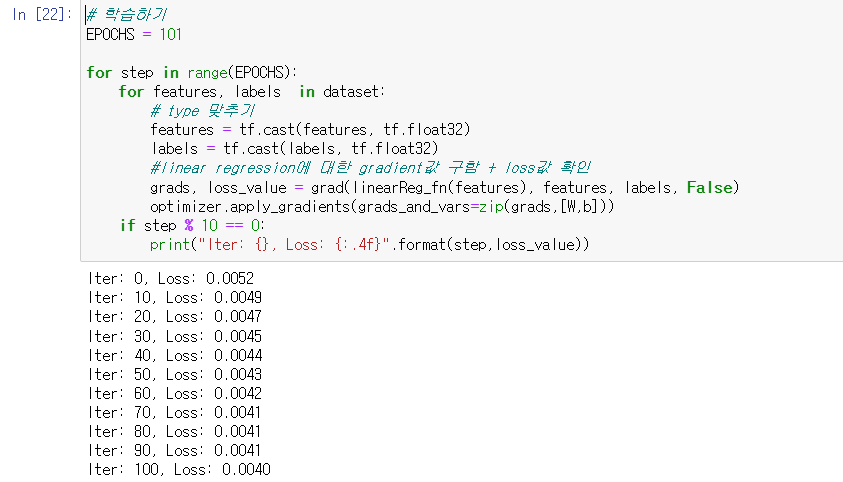

# Train

EPOCHS = 101

for step in range(EPOCHS):

    for features, labels in dataset:

        # Match dtypes (the NumPy data is float64)

        features = tf.cast(features, tf.float32)

        labels = tf.cast(labels, tf.float32)

        # Compute the linear-regression gradients and check the loss (L2 regularization off)

        grads, loss_value = grad(linearReg_fn(features), features, labels, False)

        optimizer.apply_gradients(grads_and_vars=zip(grads, [W, b]))

    if step % 10 == 0:

        print("Iter: {}, Loss: {:.4f}".format(step, float(loss_value)))

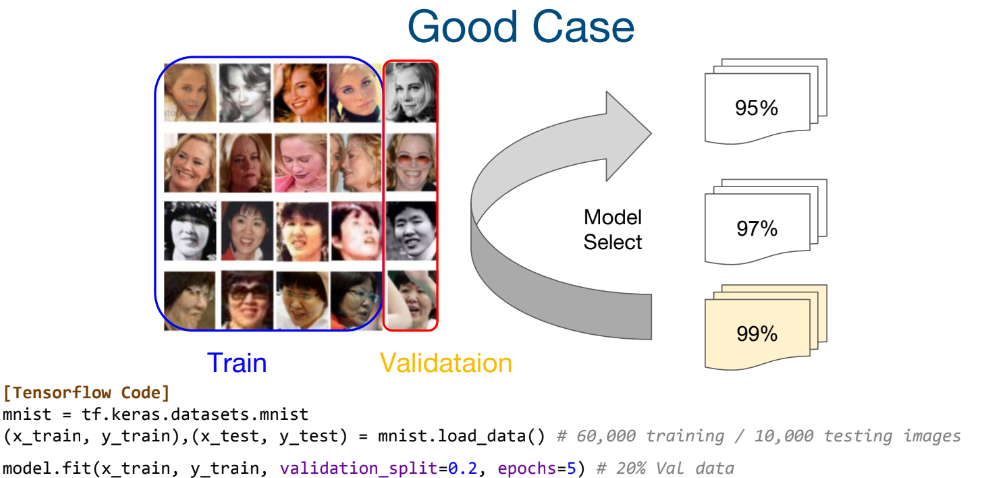

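📖 Data sets

- Kinds of data used in training

- Training data

- Validation data

- Testing data

- How the training and validation data are composed matters

- Follow-up tuning is needed to raise model performance

- Performance can be improved through hyperparameter settings and layer configuration

- Performance is raised by repeatedly testing on the same data and repeating the model-building process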
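One way to produce the three kinds of data is a shuffled split. The sketch below is a minimal version under assumed 60/20/20 ratios; the function name, the ratios, and the seed are illustrative choices, not values from the lecture.

```python
import random

def split_dataset(samples, train=0.6, val=0.2, seed=0):
    """Shuffle a copy of the samples, then cut it into train / validation / test."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train)
    n_val = round(len(shuffled) * val)
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])

train_set, val_set, test_set = split_dataset(range(100))
print(len(train_set), len(val_set), len(test_set))
```

The validation set is what gets reused while tuning hyperparameters; the test set is held back for the final check.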

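Evaluating a hypothesis

: once a model has been selected, it can be tested on new data

- The data is made up of training data + test data

- Need to check whether the model's prediction for each x matches its y value

Anomaly detection

Train a model on healthy data → run the model on unseen data to detect abnormal cases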

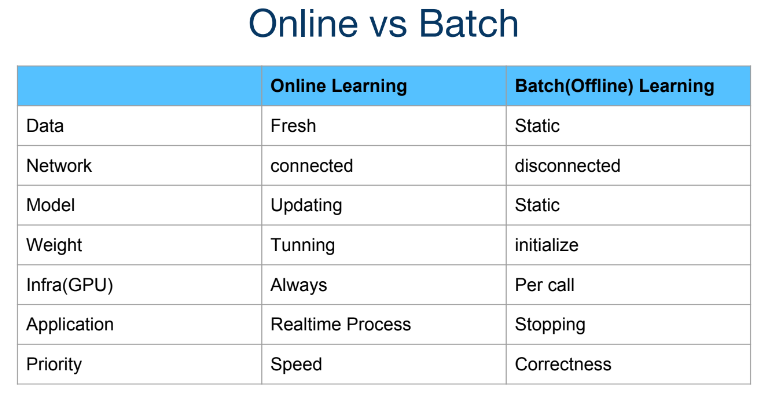
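The anomaly-detection flow can be sketched with a deliberately simple "model": the mean and standard deviation of the healthy data, with a z-score threshold deciding what counts as abnormal. This is a stand-in for illustration only; the function names, the sample readings, and the threshold of 3 are assumptions.

```python
from statistics import mean, pstdev

def fit_healthy(values):
    """'Train' on healthy data by summarizing it as (mean, standard deviation)."""
    return mean(values), pstdev(values)

def is_anomaly(x, mu, sigma, threshold=3.0):
    """Flag x when it lies more than `threshold` standard deviations from the mean."""
    return abs(x - mu) / sigma > threshold

healthy = [9.8, 10.1, 10.0, 9.9, 10.2, 10.0]   # hypothetical sensor readings
mu, sigma = fit_healthy(healthy)

for reading in (10.05, 15.0):                  # unseen data
    print(reading, is_anomaly(reading, mu, sigma))
```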

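📖 Learning methods

Online Learning

- The data is live (connected to the internet); training proceeds while the data keeps changing

- Speed matters

Batch Learning (Offline)

- The data is static (not connected to the internet); training proceeds on data that does not change

- Accuracy matters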
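The difference between the two modes can be sketched on a one-parameter model y = w·x. Batch learning computes the gradient over the whole static dataset at each step; online learning updates the weight as each sample arrives. The function names, the data, and the learning rate are assumptions for illustration, and the "stream" here is simulated by replaying the same samples.

```python
def batch_fit(data, lr=0.05, epochs=200):
    """Batch learning: every update uses the full, unchanging dataset."""
    w = 0.0
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

def online_update(w, x, y, lr=0.05):
    """Online learning: one cheap update per incoming sample."""
    return w - lr * 2 * (w * x - y) * x

data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]    # samples of y = 2x

w_batch = batch_fit(data)

w_online = 0.0
for x, y in data * 50:                          # simulated stream of arriving samples
    w_online = online_update(w_online, x, y)

print(w_batch, w_online)
```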

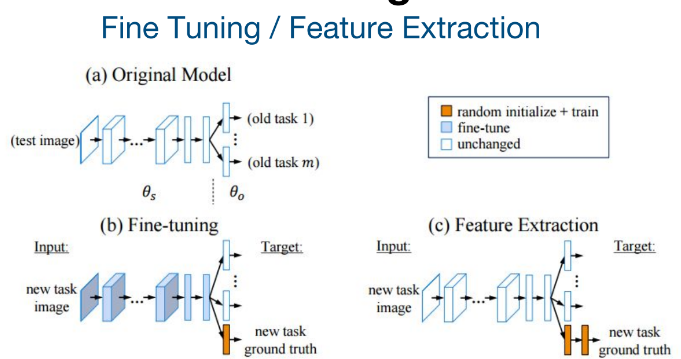

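Fine Tuning

- Take a model trained on the existing data (A) and adjust its existing weights slightly

→ Train once more on new data of a different kind, finely adjusting the weights (the existing data stays classified as before)

Feature Extraction

- Take a model trained on the existing data (A) and treat the new data (B, C) as separate tasks, training only on those

(→ The existing weights are not adjusted; new layers are added, and only they are trained to produce the final result)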
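The contrast between the two approaches can be shown on a toy two-weight model, output = w_head · (w_base · x), where `w_base` plays the role of the pretrained weights and `w_head` the newly added layer. This is only a conceptual sketch: the model, the names, the data for the new task, and the learning rate are all assumptions, and a real network would freeze or unfreeze whole layers instead.

```python
def train(data, w_base, w_head, lr, train_base, epochs=200):
    """Fit y ~ w_head * (w_base * x); the base weight is updated only if train_base is True."""
    for _ in range(epochs):
        for x, y in data:
            feat = w_base * x
            err = w_head * feat - y
            grad_head = 2 * err * feat
            grad_base = 2 * err * w_head * x
            w_head -= lr * grad_head
            if train_base:
                w_base -= lr * grad_base
    return w_base, w_head

new_task = [(0.5, 1.5), (1.0, 3.0), (1.5, 4.5)]   # new data following y = 3x
pretrained_base, new_head = 2.0, 1.0

# Feature extraction: existing weight frozen, only the new head is trained.
fe_base, fe_head = train(new_task, pretrained_base, new_head, lr=0.02, train_base=False)

# Fine tuning: the existing weight is also nudged with small steps.
ft_base, ft_head = train(new_task, pretrained_base, new_head, lr=0.02, train_base=True)

print(fe_base, fe_head)
print(ft_base, ft_head)
```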

Efficient Models - building efficient models

Ultimately, inference time itself must be minimized (processing large volumes of data is unavoidable)

→ The weights involved in inference need to be reduced

Practice code

(1) Fashion MNIST - Image Classification

import tensorflow as tf

from tensorflow import keras

# Use the Fashion MNIST dataset bundled with keras

fashion_mnist = tf.keras.datasets.fashion_mnist

# Load the train and test data as (images, labels) pairs

(train_images, train_labels), (test_images, test_labels) = fashion_mnist.load_data()

# There are 10 classes (matched to labels 0 ~ 9)

class_names = ['T-shirt/top', 'Trouser', 'Pullover', 'Dress', 'Coat',

               'Sandal', 'Shirt', 'Sneaker', 'Bag', 'Ankle boot']

# Normalize pixel values to the 0 ~ 1 range

train_images = train_images / 255.0

test_images = test_images / 255.0

# Define the keras model

model = keras.Sequential([

    keras.layers.Flatten(input_shape=(28, 28)),  # flatten each 28x28 image to match the input size

    keras.layers.Dense(128, activation=tf.nn.relu),  # hidden layer with 128 units

    keras.layers.Dense(10, activation=tf.nn.softmax)  # output layer: one probability per class (10 classes)

])

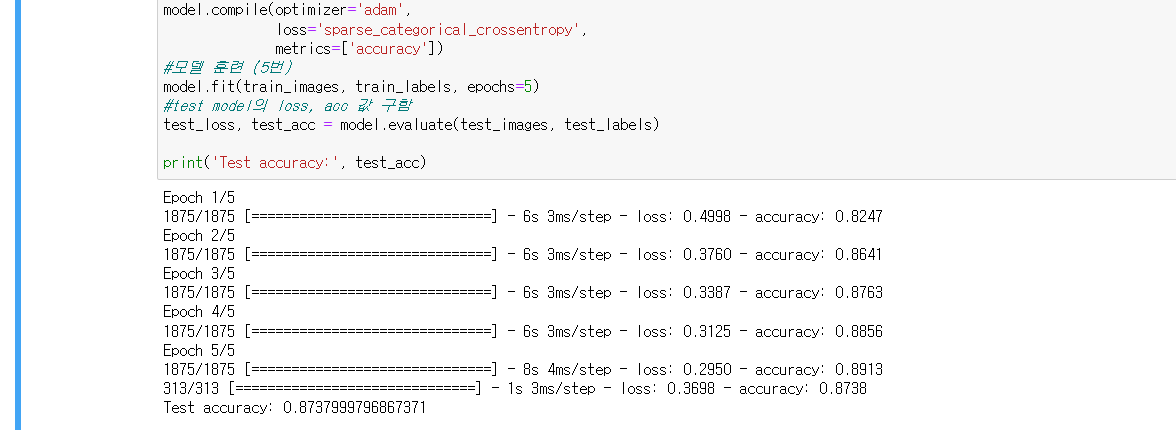

# Compile the model: declare the optimizer, define the loss, choose how accuracy is measured

model.compile(optimizer='adam',

              loss='sparse_categorical_crossentropy',

              metrics=['accuracy'])

# Train the model (5 epochs)

model.fit(train_images, train_labels, epochs=5)

# Compute the loss and accuracy on the test data

test_loss, test_acc = model.evaluate(test_images, test_labels)

print('Test accuracy:', test_acc)
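To show what the final softmax layer and the `sparse_categorical_crossentropy` loss compute, here is a standard-library sketch. The raw scores are made up for illustration, and the true label (9) is assumed; only `class_names` comes from the code above.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    top = max(scores)                      # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

class_names = ['T-shirt/top', 'Trouser', 'Pullover', 'Dress', 'Coat',
               'Sandal', 'Shirt', 'Sneaker', 'Bag', 'Ankle boot']

scores = [0.1, 0.2, 0.1, 0.3, 0.2, 0.1, 0.4, 0.2, 0.3, 2.5]   # hypothetical output scores
probs = softmax(scores)

predicted = class_names[probs.index(max(probs))]   # class with the highest probability
true_label = 9                                     # assumed integer label
loss = -math.log(probs[true_label])                # cross-entropy for one sample

print(predicted, loss)
```

The loss takes the integer label directly, which is why the labels never need one-hot encoding in this setup.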

(2) IMDB - Text Classification

imdb = keras.datasets.imdb

# Build the data for training (train, test)

(train_data, train_labels), (test_data, test_labels) = imdb.load_data(num_words=10000)

word_index = imdb.get_word_index()

# Natural-language preprocessing (the images earlier were instead normalized by dividing by 255)

# Shift every word index by 3 to make room for the special tokens below

word_index = {k: (v + 3) for k, v in word_index.items()}

word_index["<PAD>"] = 0  # padding value

word_index["<START>"] = 1  # start of a review

word_index["<UNK>"] = 2  # unknown word

word_index["<UNUSED>"] = 3  # reserved for unused entries

reverse_word_index = dict([(value, key) for (key, value) in word_index.items()])

def decode_review(text):

    return ' '.join([reverse_word_index.get(i, '?') for i in text])
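The index shift and the reverse lookup are easier to follow on a tiny vocabulary. The three-word dictionary below is a hypothetical stand-in for the one returned by `imdb.get_word_index()`; the rest mirrors the code above.

```python
# Hypothetical toy vocabulary in place of imdb.get_word_index()
word_index = {"the": 1, "movie": 2, "great": 3}

# Shift by 3 so that ids 0 ~ 3 are free for the special tokens
word_index = {k: v + 3 for k, v in word_index.items()}
word_index["<PAD>"] = 0
word_index["<START>"] = 1
word_index["<UNK>"] = 2
word_index["<UNUSED>"] = 3

reverse_word_index = {value: key for key, value in word_index.items()}

def decode_review(ids):
    """Map ids back to words; ids missing from the vocabulary become '?'."""
    return ' '.join(reverse_word_index.get(i, '?') for i in ids)

decoded = decode_review([1, 4, 5, 6, 0, 0])
print(decoded)   # <START> the movie great <PAD> <PAD>
```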

# Pad train and test data to length 256 (zeros are appended at the end)

train_data = keras.preprocessing.sequence.pad_sequences(train_data,

                                                        value=word_index["<PAD>"],

                                                        padding='post',

                                                        maxlen=256)

test_data = keras.preprocessing.sequence.pad_sequences(test_data,

                                                       value=word_index["<PAD>"],

                                                       padding='post',

                                                       maxlen=256)
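What post-padding does to a single review can be sketched in plain Python. The helper `pad_post` is hypothetical; it also drops items from the front of an over-long sequence, on the understanding that `pad_sequences` truncates from the front by default (`truncating='pre'`).

```python
def pad_post(seq, maxlen, value=0):
    """Keep at most the last `maxlen` items, then fill the tail with `value`."""
    truncated = seq[-maxlen:]
    return truncated + [value] * (maxlen - len(truncated))

short = pad_post([5, 6, 7], maxlen=5)
long = pad_post([1, 2, 3, 4, 5, 6], maxlen=4)
print(short)   # [5, 6, 7, 0, 0]
print(long)    # [3, 4, 5, 6]
```

After this step every review has the same length, so the whole set can be stacked into one 2-D array for the model.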

# input shape is the vocabulary count used for the movie reviews (10,000 words)

vocab_size = 10000

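# Declare the model

model = keras.Sequential()

model.add(keras.layers.Embedding(vocab_size, 16))  # map each word id to a 16-dimensional vector

model.add(keras.layers.GlobalAveragePooling1D())  # average the word vectors over the sequence

model.add(keras.layers.Dense(16, activation=tf.nn.relu))  # hidden layer with 16 units

model.add(keras.layers.Dense(1, activation=tf.nn.sigmoid))  # single output: probability of the positive class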

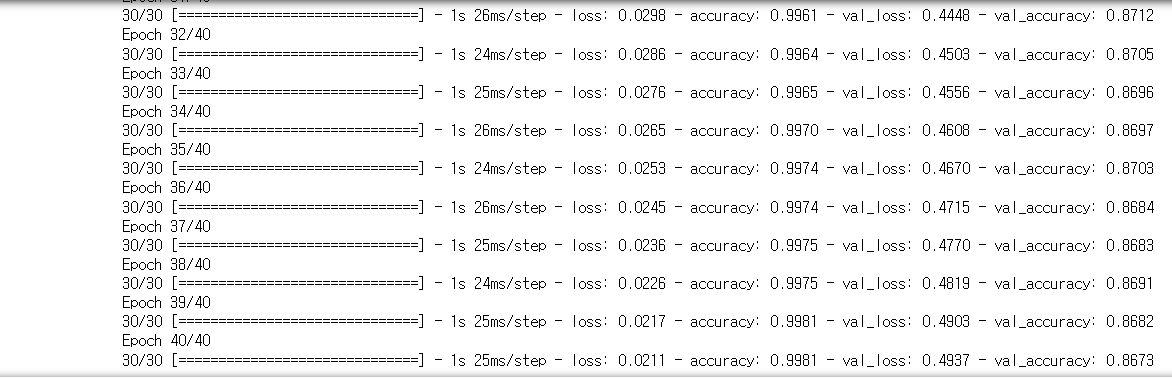

# Compile the model: declare the optimizer, define the loss, choose how accuracy is measured

model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])

# Hold out the first 10,000 training samples as validation data, then train the model

x_val = train_data[:10000]

partial_x_train = train_data[10000:]

y_val = train_labels[:10000]

partial_y_train = train_labels[10000:]

history = model.fit(partial_x_train, partial_y_train,

                    epochs=40, batch_size=512,

                    validation_data=(x_val, y_val), verbose=1)

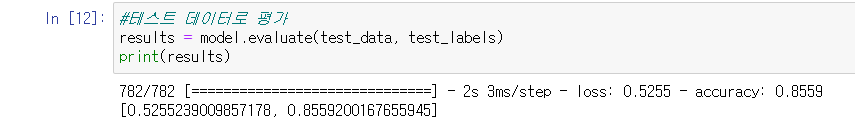

results = model.evaluate(test_data, test_labels)

print(results)
